“Parallel Programming with Modern C++: from CPU to GPU” is a two-day training course with programming exercises taught by Gordon Brown and Michael Wong. It is offered at the Meydenbauer Conference Center from 9AM to 5PM on Saturday and Sunday, September 29th and 30th, 2018 (immediately after the conference). Lunch is included.

Course Description

Parallel programming can be used to take advantage of multi-core and heterogeneous architectures and can significantly increase the performance of software. It has gained a reputation for being difficult, but is it really? Modern C++ has gone a long way towards making parallel programming easier and more accessible, providing both high-level and low-level abstractions. C++11 introduced the C++ memory model and the standard threading library, which includes threads, futures, promises, mutexes, atomics and more. C++17 takes this further by providing high-level parallel algorithms (parallel implementations of many standard algorithms), and much more is expected in C++20. The introduction of the parallel algorithms also opens C++ to supporting non-CPU architectures, such as GPUs, FPGAs, APUs and other accelerators.
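The C++11 threading facilities named above can be illustrated with a small sketch. The functions `sum_with_async` and `count_even_with_threads` are hypothetical helpers written for illustration, not taken from the course material. The first splits a sum into chunks run as tasks via `std::async` and collects the partial results through `std::future`; the second has several `std::thread` workers share one `std::atomic` counter.

```cpp
#include <algorithm>  // std::min
#include <atomic>     // std::atomic
#include <cassert>    // assert
#include <cstddef>    // std::size_t
#include <future>     // std::async, std::future
#include <numeric>    // std::accumulate, std::iota
#include <thread>     // std::thread
#include <vector>

// Sums `data` by splitting it into at most `num_chunks` contiguous chunks and
// summing each chunk in a task started with std::async(std::launch::async, ...),
// which runs the task as if on a new thread. Each task's partial sum is
// returned to the caller through a std::future.
long long sum_with_async(const std::vector<int>& data, std::size_t num_chunks) {
    assert(num_chunks > 0);
    const std::size_t chunk = (data.size() + num_chunks - 1) / num_chunks;
    std::vector<std::future<long long>> partials;
    for (std::size_t begin = 0; begin < data.size(); begin += chunk) {
        const std::size_t end = std::min(begin + chunk, data.size());
        const int* first = data.data() + begin;
        const int* last = data.data() + end;
        partials.push_back(std::async(std::launch::async, [first, last] {
            return std::accumulate(first, last, 0LL);
        }));
    }
    long long total = 0;
    for (auto& partial : partials) {
        total += partial.get();  // blocks until that chunk's task has finished
    }
    return total;
}

// Counts the even values in `data` with `num_threads` std::thread workers.
// Worker t inspects indices t, t + num_threads, t + 2 * num_threads, ...
// All workers increment one shared std::atomic counter, so this single
// shared integer needs no mutex.
long long count_even_with_threads(const std::vector<int>& data, std::size_t num_threads) {
    assert(num_threads > 0);
    std::atomic<long long> count{0};
    std::vector<std::thread> workers;
    for (std::size_t t = 0; t < num_threads; ++t) {
        workers.emplace_back([&data, &count, t, num_threads] {
            for (std::size_t i = t; i < data.size(); i += num_threads) {
                if (data[i] % 2 == 0) {
                    ++count;  // atomic read-modify-write: no data race
                }
            }
        });
    }
    for (auto& worker : workers) {
        worker.join();  // wait for every worker before reading the result
    }
    return count.load();
}
```

For example, with `v` holding the values 1 to 1000, `sum_with_async(v, 4)` returns 500500 and `count_even_with_threads(v, 4)` returns 500. An atomic is the simplest correct choice for one shared integer; sharing a larger data structure between threads would typically call for a `std::mutex` instead.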

This course will teach you the fundamentals of parallelism: how to recognise when to use parallelism, how to make the best choices, and common parallel patterns, such as reduce, map and scan, that can be used again and again. It will teach you how to make use of the C++ standard threading library, and will then go further by teaching you how to extend parallelism to heterogeneous devices, using the SYCL programming model to implement these patterns on a GPU in standard C++.
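The map, reduce and scan patterns mentioned above correspond directly to C++17 standard algorithms. The sketch below uses hypothetical helper names (`map_square`, `reduce_sum`, `scan_prefix_sum`) that are not taken from the course. It calls the sequential overloads of `std::transform`, `std::reduce` and `std::inclusive_scan`; each of these also has an overload taking an execution policy such as `std::execution::par` (from `<execution>`) as its first argument, which permits the library to run it in parallel. The sequential form is shown because some standard library implementations, such as GCC's libstdc++, rely on an additional backend (Intel TBB) to actually execute the parallel overloads in parallel.

```cpp
#include <algorithm>  // std::transform
#include <cassert>
#include <numeric>    // std::reduce, std::inclusive_scan (C++17)
#include <vector>

// Map: apply the same function independently to every element. No element
// depends on any other, so the work can be split freely across threads or
// GPU work-items.
std::vector<int> map_square(const std::vector<int>& in) {
    std::vector<int> out(in.size());
    std::transform(in.begin(), in.end(), out.begin(),
                   [](int x) { return x * x; });
    return out;
}

// Reduce: combine all elements into a single value. Unlike std::accumulate,
// std::reduce may group and reorder the operations, which permits a
// tree-shaped parallel evaluation; the operation should therefore be
// associative and commutative, as + on integers is.
int reduce_sum(const std::vector<int>& in) {
    return std::reduce(in.begin(), in.end(), 0);
}

// Scan (inclusive prefix sum): element i of the result is in[0] + ... + in[i].
std::vector<int> scan_prefix_sum(const std::vector<int>& in) {
    std::vector<int> out(in.size());
    std::inclusive_scan(in.begin(), in.end(), out.begin());
    return out;
}
```

For the input {1, 2, 3, 4}, these return {1, 4, 9, 16}, 10 and {1, 3, 6, 10} respectively. Passing `std::execution::par` as the first argument (for example `std::reduce(std::execution::par, in.begin(), in.end(), 0)`) requests parallel execution; for these integer operations the results are the same.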

Prerequisites

This course requires the following:

- Working knowledge of C++11.

- Git.

Familiarity with the following would be advantageous, but is not required:

- Knowledge of C++14/17 (including templates and move semantics).

- CMake.

- Understanding of multi-threaded programming.

Course Topics

- Course aim

  - The aim of this course is to provide students with a solid understanding of the fundamentals and best practices of parallelism in C++, common parallel patterns and how to develop for heterogeneous architectures. Students will gain both an understanding of the fundamentals of parallelism and practical knowledge of writing parallel algorithms for CPU and GPU architectures using a variety of modern C++ features for parallelism and concurrency. Students will also gain a working understanding of the C++ standard threading library and the SYCL programming model.

- Course objectives

  - Understanding of why parallelism is important.

  - Understanding of parallelism fundamentals.

  - Understanding of parallel patterns.

  - Understanding of parallel algorithms.

  - Understanding of heterogeneous system architectures.

  - Understanding of asynchronous programming.

  - Understanding of the challenges of using heterogeneous systems.

  - Understanding of the C++ threading library.

  - Understanding of the SYCL programming model.

- Course outcomes

  - Understanding of why parallelism is important.

    - Understand the current landscape of computer architectures and their limitations.

    - Understand the performance benefits of parallelism.

    - Understand when and where parallelism is appropriate.

  - Understanding of parallelism fundamentals.

    - Understand the difference between parallelism and concurrency.

    - Understand the difference between task parallelism and data parallelism.

    - Understand the balance of productivity, efficiency and portability.

  - Understanding of parallel patterns.

    - Understand the importance of parallel patterns.

    - Understand common parallel patterns such as map, reduce, scatter, gather and scan, among others.

  - Understanding of parallel algorithms.

    - Understand how to apply parallelism to common algorithms.

    - Understand how to alter the behavior of an algorithm using execution policies.

  - Understanding of heterogeneous system architectures.

    - Understand the program execution and memory model of non-CPU architectures, like GPUs.

    - Understand SIMD execution and its benefits and limitations.

  - Understanding of asynchronous programming.

    - Understand how to execute work asynchronously.

    - Understand how to wait on the completion of asynchronous work.

    - Understand how to execute both task and data parallel work.

  - Understanding of the challenges of using heterogeneous systems.

    - Understand the challenges of executing code on a remote device.

    - Understand how code can be offloaded to a remote device.

    - Understand the effects of latency between different memory regions and important considerations for data movement.

    - Understand the importance of coalesced data access and how to achieve it.

    - Understand the importance of cache locality and how to achieve it.

  - Understanding of the C++ threading library.

    - Understand how to create threads and wait on threads.

    - Understand how to share data between threads.

  - Understanding of the SYCL programming model.

    - Understand how to write a variety of C++ applications for heterogeneous systems using the SYCL programming model.

Course Instructors

Gordon Brown is a senior software engineer at Codeplay Software specializing in heterogeneous programming models for C++. He has been involved in the standardization of the Khronos standard SYCL and the development of Codeplay’s implementation of the standard from its inception. More recently he has been involved in the efforts within SG1/SG14 to standardize execution and to bring heterogeneous computing to C++.

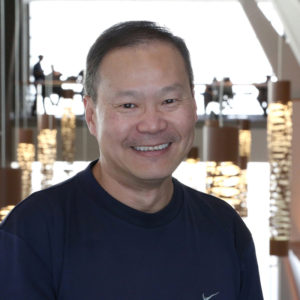

Michael Wong is VP of R&D at Codeplay Software. He is a current Director and VP of ISOCPP, and a senior member of the C++ Standards Committee with more than 15 years of experience. He chairs the WG21 SG5 Transactional Memory and SG14 Games Development/Low Latency/Financials C++ groups and is the co-author of a number of C++/OpenMP/Transactional Memory features including generalized attributes, user-defined literals, inheriting constructors, weakly ordered memory models, and explicit conversion operators. He has published numerous research papers and is the author of a book on C++11. He has been an invited speaker and keynote speaker at numerous conferences. He is currently the editor of the SG1 Concurrency TS and the SG5 Transactional Memory TS. He is also the Chair of the SYCL standard and of all Programming Languages for the Standards Council of Canada. Previously, he was CEO of OpenMP, involved with taking OpenMP toward accelerator support, and was the Technical Strategy Architect responsible for moving IBM’s compilers to Clang/LLVM after leading IBM’s XL C++ compiler team.