AI++ 201: Building HFT Infrastructure with AI is a two-day onsite training course with programming examples, taught by Jody Hagins. It is offered at the Gaylord Rockies from 09:00 to 17:00 Aurora time (MDT) on Saturday, September 19th and Sunday, September 20th, 2026 (immediately following the conference). Lunch is included.


Course Description

In a previous conference talk, I demonstrated building a complete OPRA feed handler without writing a single line of code by hand. Over the two days of this workshop, you’re going to do something even more difficult.

We’re building a matching engine. Not a toy, but one that conforms to IEX specifications, with the kind of IPC infrastructure you’d actually deploy: lock-free queues, seqlock arrays, a sequencer pattern. The works. And you won’t write a single line of code yourself.

Every line comes from Claude Code. You prompt, you guide, you review, you course-correct, but your fingers don’t type C++. By the end, you’ll have a working matching engine and a visceral understanding of what AI can and can’t do with a serious C++ project.

We will first cover the foundations: how agentic loops work (condensed review), and why C++ is uniquely challenging for AI assistants. Compilation latency breaks the fast-iteration feedback loop. Header/source splits multiply context requirements. Templates generate error novels. The agent can write code that compiles but has undefined behavior. We’ll develop strategies for each problem, then begin building.

We will build the necessary pieces: lock-free queues, seqlock arrays, order books, matching logic, end-to-end integration. Everyone codes with their own Claude instance. Results will diverge, but that’s also a great opportunity for learning.
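If a seqlock is new to you, the sketch below gives a rough idea of the pattern. It is an illustration only, not workshop code: the `Quote` payload and the `SeqlockQuote` class are hypothetical names, and it assumes exactly one writer thread. The writer bumps a sequence counter to an odd value, updates the payload, then bumps the counter to the next even value. Readers copy the payload and retry unless they saw the same even counter before and after the copy.

```cpp
#include <atomic>
#include <cstdint>

// Hypothetical payload: prices as integer ticks.
struct Quote {
    std::int64_t bid_price;
    std::int64_t ask_price;
};

// Minimal single-slot seqlock sketch. Assumes ONE writer and any number
// of readers. Payload fields are relaxed atomics so that concurrent
// reads during a write are not a data race under the C++ memory model.
class SeqlockQuote {
public:
    // Writer side: must only ever be called from a single thread.
    void store(const Quote& q) {
        const std::uint32_t seq = seq_.load(std::memory_order_relaxed);
        seq_.store(seq + 1, std::memory_order_relaxed);       // odd: write in progress
        std::atomic_thread_fence(std::memory_order_release);  // odd value visible before payload
        bid_.store(q.bid_price, std::memory_order_relaxed);
        ask_.store(q.ask_price, std::memory_order_relaxed);
        seq_.store(seq + 2, std::memory_order_release);       // even: payload is stable
    }

    // Reader side: spins until it observes a consistent snapshot.
    Quote load() const {
        for (;;) {
            const std::uint32_t before = seq_.load(std::memory_order_acquire);
            const Quote q{bid_.load(std::memory_order_relaxed),
                          ask_.load(std::memory_order_relaxed)};
            std::atomic_thread_fence(std::memory_order_acquire);
            const std::uint32_t after = seq_.load(std::memory_order_relaxed);
            if (before == after && (before & 1u) == 0) {
                return q;  // no write overlapped the copy
            }
        }
    }

private:
    std::atomic<std::uint32_t> seq_{0};
    std::atomic<std::int64_t> bid_{0};
    std::atomic<std::int64_t> ask_{0};
};
```

A seqlock array in this sense would be one such slot per symbol. The fences and memory orders here are exactly the kind of detail worth reviewing closely in generated code, since a version with weaker ordering can compile and pass single-threaded tests.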

You don’t need previous experience with trading infrastructure.

We chose this project because it is not a toy example, yet modern AI techniques are fully capable of building a functioning system that is at least on par with what a team of junior engineers could build.

The goal is to learn how to use the AI tools, not write production-grade trading software.

By spending two days in an intense lab setting, attendees gain hands-on experience building real C++ software systems using generative artificial intelligence.

Prerequisites

In order to benefit from this class, you will need:

- Professional C++ experience: you should be comfortable writing and debugging C++ code

- Basic familiarity with REST APIs and JSON (we won’t teach HTTP or JSON parsing)

- An OpenRouter account with API key (free tier is sufficient for the workshop)

- A laptop with a working C++ build environment (C++17 or later, CMake)

- Willingness to experiment, break things, and discuss discoveries with fellow participants

Starter code is provided that handles API communication and JSON parsing. You’re here to learn how AI agents work, not to debug libcurl.

Course Topics

- The Agentic Loop
  - Tool calling, system prompts, the loop
  - Live demonstration: watching Claude Code process a task
  - For those who took 101: reinforcement; for others: orientation

- Why C++ Is Uniquely Challenging for AI
  - Compilation latency breaks the fast-iteration feedback loop
  - Header/source splits multiply context requirements
  - Template error messages that read like encrypted ransom notes
  - Undefined behavior: code that compiles and runs but is broken
  - Build system complexity as another layer the agent must navigate

- Strategies for AI-Assisted C++
  - Smaller changes, compile-after-every-change prompts, fast-compiling test files
  - Showing both files, explicit instructions about what goes where
  - Sanitizers in the build, explicit UB checks in prompts, code review patterns
  - Explicit API rules
  - Multiple task-oriented review agents

- Long Term Memory
  - Persistent project context: what the agent knows before you ask anything
  - Writing effective CLAUDE.md for C++ projects
  - Build commands, code organization, standards, constraints, patterns
  - Live exercise: crafting CLAUDE.md for our matching engine

- Matching Engine Overview
  - What we’re building: order entry, matching, market data publishing
  - IPC architecture: queues for orders and executions, seqlock arrays for NBBO/LULD
  - The sequencer pattern
  - IEX specification overview

- Building the Foundation
  - Project structure generation with Claude Code
  - Implementing seqlock primitives
  - Comparing results across participants
  - First taste of the workflow: prompt, generate, review, course-correct

- Seqlock Arrays for Market Data
  - Single writer, multiple reader pattern
  - Memory layout for cache efficiency
  - Building NBBO and LULD data structures
  - Testing concurrent access

- Lock-Free Queues
  - Inbound order queue (sequencer input)
  - Outbound execution/market data queue
  - SPSC vs MPSC design decisions
  - Memory ordering choices
  - Catching subtle bugs in generated lock-free code

- Order Book Data Structures
  - Price levels with price-time priority
  - Fast lookup and modification
  - Design approaches: let Claude design vs. specify what you want
  - Memory layout considerations

- Matching Logic
  - New order handling
  - Matching against resting orders
  - Partial fills
  - Order cancellation
  - Edge cases the agent will miss (and how to catch them)

- End-to-End Integration
  - Connecting all components
  - Orders flow in, matches happen, market data flows out
  - Testing with sample order scenarios
  - Performance profiling

- Collective Code Review
  - What did we build? Is it production-ready?
  - Best code Claude generated
  - Worst code Claude generated
  - Bugs caught, bugs almost missed
  - Comparing participant implementations

- Automated Code Generation
  - Determinism with scripts
  - Prevent the “Not my fault” lie all LLMs tell
  - Manage agents that work 24/7

- Patterns and Takeaways
  - Prompting strategies that consistently produced good C++
  - Review practices that caught the most bugs
  - When to let Claude iterate vs. when to intervene
  - The matching engine is yours; keep building after the workshop
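To give a flavor of the "Lock-Free Queues" and "Memory ordering choices" topics listed above, here is a minimal sketch of a bounded single-producer, single-consumer (SPSC) ring buffer. It is an illustration under stated assumptions, not the workshop's implementation: the `SpscQueue` name, the power-of-two capacity requirement, and the 64-byte cache-line figure are all choices made for this example.

```cpp
#include <array>
#include <atomic>
#include <cstddef>
#include <optional>

// Bounded SPSC ring buffer sketch. Assumes exactly one thread calls
// try_push and exactly one (other) thread calls try_pop. T must be
// default-constructible and copyable in this simplified version.
template <typename T, std::size_t Capacity>
class SpscQueue {
    static_assert(Capacity > 0 && (Capacity & (Capacity - 1)) == 0,
                  "Capacity must be a power of two");

public:
    // Producer side. Returns false when the queue is full.
    bool try_push(const T& value) {
        const std::size_t tail = tail_.load(std::memory_order_relaxed);
        const std::size_t head = head_.load(std::memory_order_acquire);
        if (tail - head == Capacity) {
            return false;  // full
        }
        slots_[tail & (Capacity - 1)] = value;
        // Release: the slot write above becomes visible before the new tail.
        tail_.store(tail + 1, std::memory_order_release);
        return true;
    }

    // Consumer side. Returns std::nullopt when the queue is empty.
    std::optional<T> try_pop() {
        const std::size_t head = head_.load(std::memory_order_relaxed);
        const std::size_t tail = tail_.load(std::memory_order_acquire);
        if (head == tail) {
            return std::nullopt;  // empty
        }
        T value = slots_[head & (Capacity - 1)];
        // Release: the slot read above completes before the producer may reuse it.
        head_.store(head + 1, std::memory_order_release);
        return value;
    }

private:
    // Separate cache lines (64 bytes assumed) to reduce false sharing
    // between the producer's and consumer's indices.
    alignas(64) std::atomic<std::size_t> head_{0};
    alignas(64) std::atomic<std::size_t> tail_{0};
    alignas(64) std::array<T, Capacity> slots_{};
};
```

For example, `SpscQueue<int, 1024> orders;` gives the producer `orders.try_push(42)` and the consumer `orders.try_pop()`. An MPSC variant needs a different design on the producer side (for instance a compare-exchange loop on the tail), which is one reason the SPSC vs. MPSC decision is listed as a topic of its own.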

Course Instructor

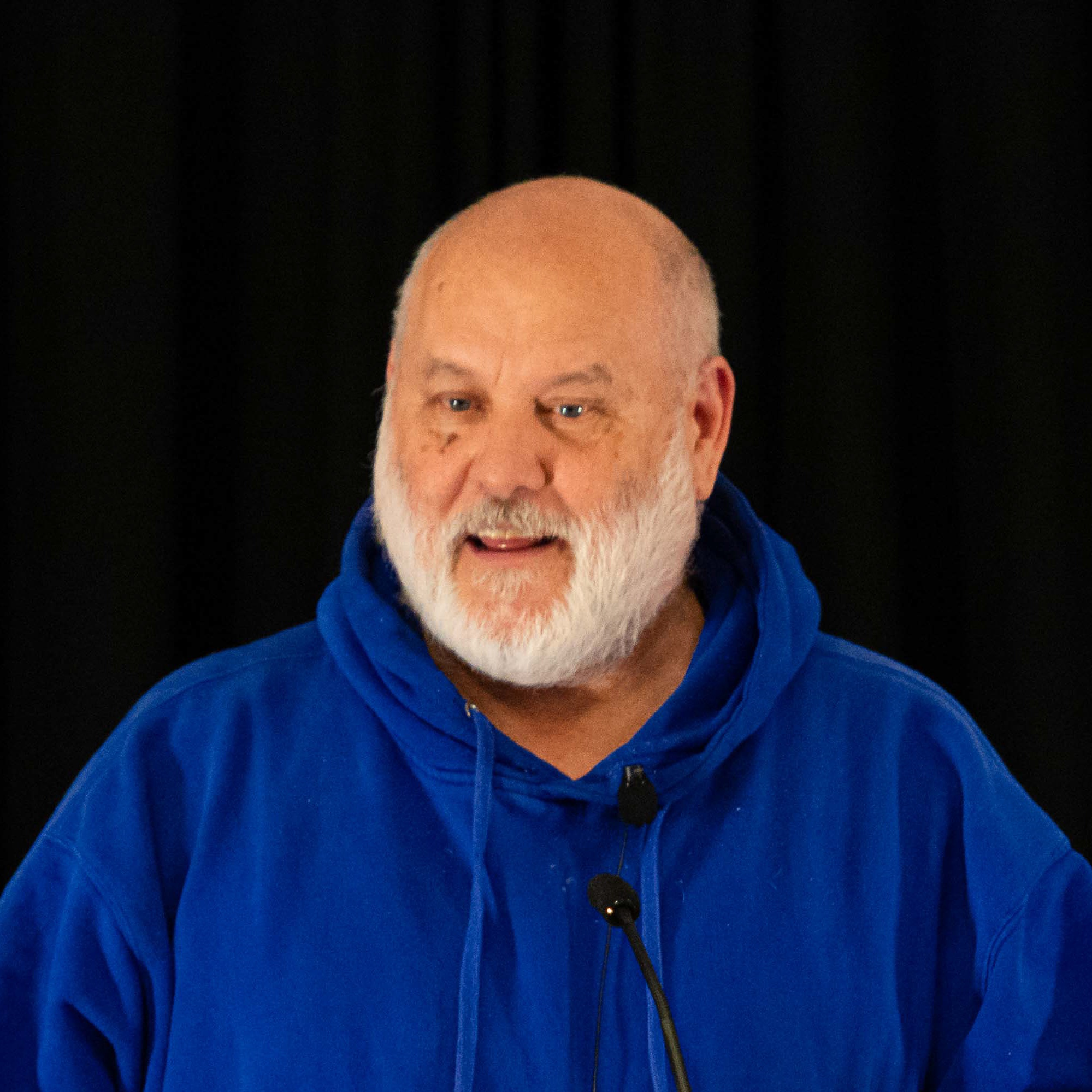

Jody Hagins is a Director at LSEG, working on high-frequency trading infrastructure, including feed handlers, normalization processes, and low-latency systems. His background spans four decades, from graduate work in AI during the 1980s (Lisp, Smalltalk, model-based reasoning) through Unix kernel development in C, to modern C++ for financial systems.

After approaching generative AI with healthy skepticism born of living through two AI winters, Jody discovered that the tools had finally caught up, but only for those who understand how to use them effectively. His 2026 talk, “Meet Claude, Your New HFT Infrastructure Engineer,” demonstrated building a complete OPRA feed handler without writing code by hand.

Jody has spoken at numerous C++ conferences on a wide range of topics.